Kubernetes lets you manage large clusters composed of Docker containers. And where there is a lot of computing power, there is a lot of data throughput. That throughput still has to be spread so that it reaches and makes use of particular Docker containers. This is called load balancing. Kubernetes supports two basic forms of it: internal load balancing among the Docker containers themselves, and external load balancing for clients that call your application from outside the cluster, that is, your users.

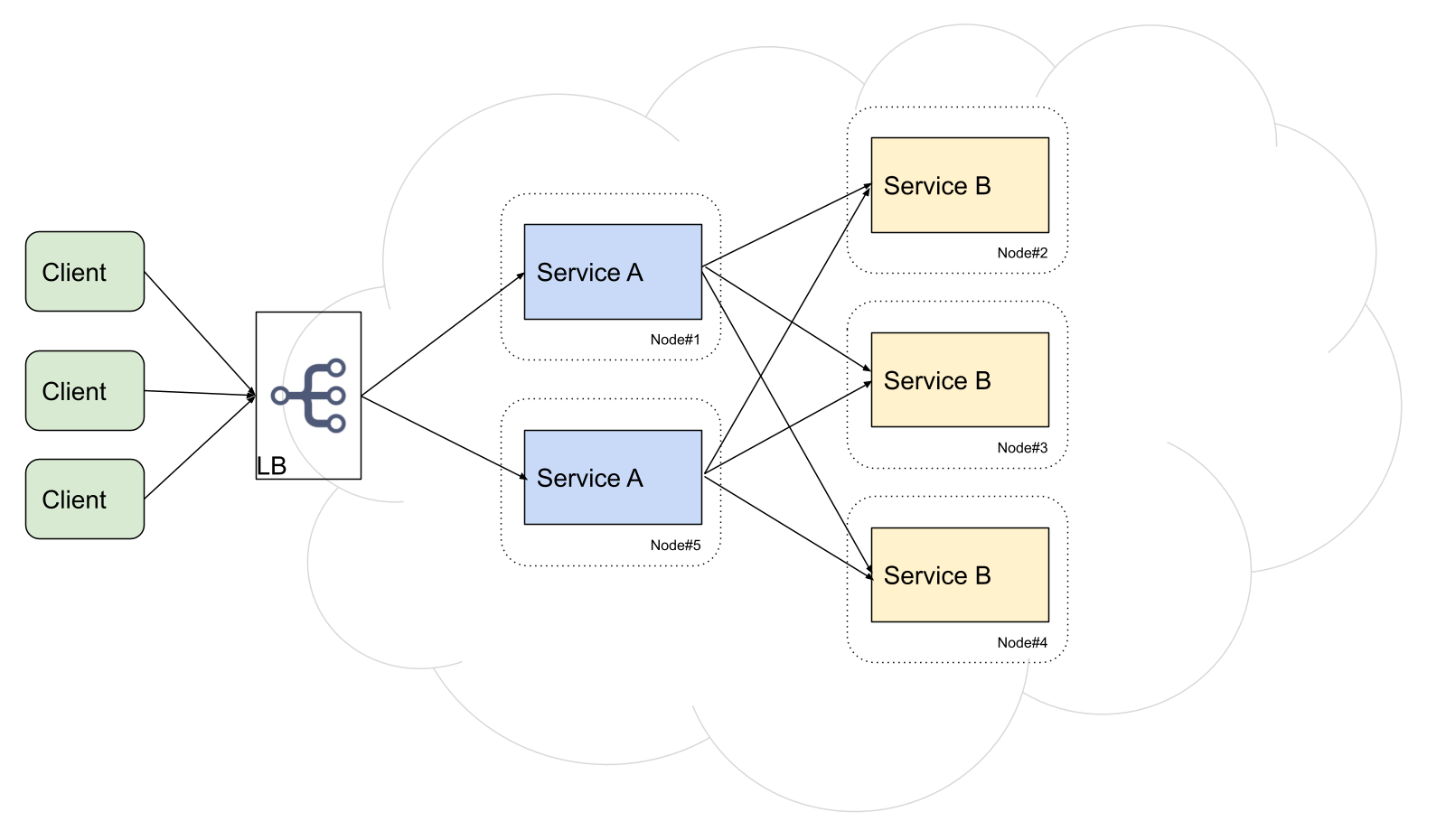

Internal Load Balancing with Kubernetes

The usual approach to modeling an application in Kubernetes is to provide domain models for pods, replication controllers and services. Have a look at the great documentation if you are not familiar with these concepts. To simplify, a pod is a set of Docker containers that are always located on the same node. A replication controller keeps the requested number of pod replicas running, which is what makes scaling possible. Last but not least, a service hides all the pod instances created by the replication controller behind a facade.

The concept of services brings a very powerful system of internal load balancing.

Look at the picture above. Service A uses service B. A common pattern is to provide some form of service discovery so that service A can look up the endpoint of an instance of service B and use it for later communication. However, service B has its own lifecycle: an instance can fail for good, or service B can have multiple running instances. Thus, the set of service B instances changes over time.

You need some boilerplate code to handle the algorithm described above. Oh, wait. Kubernetes already contains a similar system.

Let's say service B has 3 pods, i.e. running Docker containers. Kubernetes allows you to introduce a single service B that hides these three pods. On top of that, it gives you one virtual IP address. Service A can then use this virtual IP address, and the Kubernetes proxying system routes the traffic to a specific pod. Cool, isn't it?
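To make the idea concrete, here is a minimal Python sketch of a facade with one stable address in front of a changing set of pods. It is only a toy model and not how the Kubernetes proxy is implemented; the class name `ServiceFacade` and all IP addresses are made up for illustration.

```python
from itertools import count


class ServiceFacade:
    """Toy model of a service: one stable virtual IP in front of a changing set of pods."""

    def __init__(self, virtual_ip, pod_ips):
        self.virtual_ip = virtual_ip      # hypothetical address, never changes
        self.pod_ips = list(pod_ips)      # current pod addresses, may change
        self._counter = count()

    def update_pods(self, pod_ips):
        # Pods come and go; callers keep using the same virtual IP.
        self.pod_ips = list(pod_ips)

    def route(self):
        # Round robin: hand out the pods one after another.
        if not self.pod_ips:
            raise RuntimeError("no pods available behind " + self.virtual_ip)
        return self.pod_ips[next(self._counter) % len(self.pod_ips)]


service_b = ServiceFacade("10.0.0.100", ["10.1.0.1", "10.1.0.2", "10.1.0.3"])
first_four = [service_b.route() for _ in range(4)]
print(first_four)
```

Service A only ever needs to know `10.0.0.100`; which pod answers is decided on every call, and `update_pods` can swap the set underneath without service A noticing.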

The routing supports sticky sessions or a round robin algorithm, depending on how the service is configured. The last thing that is up to you is to have a clever client for your service, e.g. one built on Hystrix, so that it retries and handles timeouts correctly.
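What "clever client" means can be sketched in a few lines of Python. This is not Hystrix and does not use its API; it is just a plain retry loop with exponential backoff, assuming the wrapped call signals failures by raising `ConnectionError` or `TimeoutError`. The names `call_with_retries` and `flaky_call` are hypothetical.

```python
import time


def call_with_retries(operation, attempts=3, base_delay=0.1, sleep=time.sleep):
    """Call operation(); retry on connection errors and timeouts with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(attempts):
        try:
            return operation()
        except (ConnectionError, TimeoutError) as error:
            last_error = error
            if attempt < attempts - 1:
                # Wait 0.1s, 0.2s, 0.4s, ... before the next try.
                sleep(base_delay * 2 ** attempt)
    raise last_error


# Hypothetical flaky call to service B: fails twice, then succeeds.
calls = {"count": 0}


def flaky_call():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("connection refused")
    return "response from service B"


result = call_with_retries(flaky_call, sleep=lambda seconds: None)
print(result, "after", calls["count"], "calls")
```

The `sleep` parameter is only there so the example can run without actually waiting; a real client would also put a timeout on the call itself.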

External Load Balancing using Kubernetes

This type of load balancing is about routing traffic from external clients to the proper target pods. You have multiple options here.

Buy AWS/GCE Load Balancer

Load balancing, like networking in general, is a difficult system: sharing public IPs, protocols that resolve hostnames to IPs and IPs to MAC addresses, high availability of the load balancer, and so on. It makes sense to buy a load balancer as a service if you deploy your app with a well-known cloud provider. For this purpose, services support the LoadBalancer type. Kubernetes then uses the cloud provider's API to configure the load balancer.
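As a rough illustration, the snippet below builds such a service definition as a Python dictionary and prints it as JSON. The name `example`, the `app: example` selector and the port numbers are assumptions made up for this sketch; only the `type: LoadBalancer` field is the point.

```python
import json

# Hypothetical service: the name, selector and ports are placeholders.
service_manifest = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "example"},
    "spec": {
        # Asks the cloud provider integration for an external load balancer.
        "type": "LoadBalancer",
        # Pods carrying this label receive the traffic.
        "selector": {"app": "example"},
        # Port 80 on the load balancer, port 8080 inside the pods.
        "ports": [{"port": 80, "targetPort": 8080}],
    },
}

print(json.dumps(service_manifest, indent=2))
```

The printed JSON can be saved to a file and passed to `kubectl create -f`, since kubectl accepts JSON as well as YAML.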

This is the simplest way. But what are your other options if you are not so lucky and can't do that? You have to deploy your own load balancer. Let's review the existing load balancers that can be deployed on premises.

Kube-proxy as external load balancer

I've already described how Kubernetes supports internal load balancing. Is it possible to use the same mechanism for external load balancing? Yes, but there are some limitations. Kube-proxy does exactly what we want, but it's a very simple TCP (L4) load balancer. I noticed some failures during performance testing from external clients: the proxy sometimes refused the connection, did not know the target address of a particular host, and so on. Moreover, there is no way to configure kube-proxy, e.g. to set timeouts. And since it's a TCP load balancer, there is also no way to do retries at the HTTP layer.

Kube-proxy also suffers from performance issues, but Kubernetes 1.1 supports native iptables, so huge progress has been made recently.

These are all reasons why I recommended a clever service client in the internal load balancing section above. So I would still recommend using kube-proxy as an internal load balancer, but using a solid external load balancer implementation for external load balancing.

Kubernetes Ingress

Version 1.1 of the system introduced the Ingress feature. However, it's still in beta. Ingress is a new kind of Kubernetes resource. It's an API that lets you define rules for where to send traffic. For example, you can route traffic from the path http://host/example to a service called example. It's pretty simple.
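The rule from the example could be written roughly as follows. This is a sketch against the beta API of that era (`extensions/v1beta1`, with `serviceName` and `servicePort` in the backend); later Kubernetes releases moved Ingress to `networking.k8s.io/v1` with a somewhat different backend shape. The resource name `example-ingress` and port 80 are assumptions.

```python
import json

# Hypothetical Ingress: routes http://host/example to the service "example".
ingress_manifest = {
    "apiVersion": "extensions/v1beta1",   # beta API group of the 1.1 era
    "kind": "Ingress",
    "metadata": {"name": "example-ingress"},
    "spec": {
        "rules": [
            {
                "host": "host",
                "http": {
                    "paths": [
                        {
                            "path": "/example",
                            "backend": {
                                "serviceName": "example",
                                "servicePort": 80,
                            },
                        }
                    ]
                },
            }
        ]
    },
}

print(json.dumps(ingress_manifest, indent=2))
```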

Ingress itself is not a load balancer. It's just a domain model and a tool to describe the problem. There must therefore be a service that consumes this model and does the actual load balancing; Ingress just helps you as an abstraction. The Kubernetes team has already developed an HAProxy implementation of this load balancing service.
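The split between the model and the thing that acts on it can be shown with a tiny Python sketch. The rules are reduced to hypothetical `(path prefix, service name)` pairs, and `resolve_backend` plays the role of the component that consumes them. Longest-prefix matching is an assumption of this sketch; real implementations may match paths differently.

```python
def resolve_backend(rules, request_path, default=None):
    """Return the service for the longest path prefix matching request_path."""
    matches = [
        (prefix, service)
        for prefix, service in rules
        # Match the prefix itself or anything below it, but not "/examples".
        if request_path == prefix
        or request_path.startswith(prefix.rstrip("/") + "/")
    ]
    if not matches:
        return default
    return max(matches, key=lambda match: len(match[0]))[1]


# Hypothetical rules, as a controller might extract them from Ingress resources.
rules = [("/example", "example"), ("/example/admin", "example-admin")]

print(resolve_backend(rules, "/example/users"))
print(resolve_backend(rules, "/example/admin/settings"))
print(resolve_backend(rules, "/other"))
```

The rules say nothing about how traffic is actually forwarded; that part is left entirely to whichever load balancer sits behind the model.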

Service Load Balancer

There is a project dating from earlier Kubernetes times called service load balancer. It has been under development for a year. It's probably the predecessor of Ingress. This load balancer also uses two levels of abstraction, and any existing load balancer can serve as the implementation; HAProxy is currently the only one.

Vulcand

Vulcand is a new load balancer. Its technology is compatible, as the base configuration is stored in etcd, which makes it possible to use the watch command. Note that vulcand is an HTTP-only load balancer; TCP support is among the requested features. It's also not integrated with Kubernetes, but here is a simple tutorial.

Standalone HAProxy

In fact, I ended up developing my own load balancer component based on an existing system. It's pretty easy. There is a Kubernetes Java client from fabric8; you can use it to watch the Kubernetes API, and it's then up to you which existing load balancer you leverage and which kind of config file you generate. However, since it's simple, there are already some projects that do the same:

- http://www.dasblinkenlichten.com/kubernetes-101-external-access-into-the-cluster/

- https://hub.docker.com/r/zymlabs/kubernetes-endpoint-proxy/
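The "generate a config file" half of such a component can be sketched in Python. This is not the component described above and it leaves out the watch loop entirely: the `endpoints` list simply stands in for whatever a watch on the Kubernetes API would deliver. The function name, service name, IPs and ports are all made up for illustration.

```python
def render_haproxy_config(service_name, listen_port, endpoints):
    """Render a minimal HAProxy frontend/backend pair for the given pod endpoints."""
    lines = [
        "frontend {}_front".format(service_name),
        "    bind *:{}".format(listen_port),
        "    mode http",
        "    default_backend {}_back".format(service_name),
        "",
        "backend {}_back".format(service_name),
        "    mode http",
        "    balance roundrobin",
    ]
    # One "server" line per pod; sorting keeps the output stable between runs.
    for index, (ip, port) in enumerate(sorted(endpoints)):
        lines.append("    server pod{} {}:{} check".format(index, ip, port))
    return "\n".join(lines) + "\n"


# Hypothetical endpoints, standing in for the result of watching the API.
endpoints = [("10.1.0.2", 8080), ("10.1.0.1", 8080)]
config = render_haproxy_config("example", 80, endpoints)
print(config)
```

Each time the set of endpoints changes, the component would re-render the file and ask HAProxy to reload it.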