I have always liked LINQ queries and preferred them over a simple foreach loop. The performance issue in question was caused by the following query:

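```csharp
// Returns the first entity with a matching id, or default (null) if there is none.
// The lambda parameter is named x so it does not clash with the local variable e.
Entity e = entities.Where(x => x.Id.Equals(id)).FirstOrDefault();
```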

Our list contains only a few items, in the extreme case just one. The search is still necessary because there can, of course, be more items. I was surprised by the results of a simple test, which is attached at the bottom of this article.

The test simply inserts 1, 50 and finally 100 unique entities into the list. Each entity holds only a string identifier (a UUID). Each of the listed approaches tries to find the entity placed at the end of the list.
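The data setup described above could look roughly like this. It is a minimal sketch, not the original test code: the `Entity` class, the `TestData.Create` helper and the use of `Guid.NewGuid()` to produce the UUID strings are all assumptions made for illustration.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical entity type: the article only says each entity holds a string UUID.
public class Entity
{
    public string Id { get; }
    public Entity(string id) { Id = id; }
}

public static class TestData
{
    // Hypothetical helper: builds a list of `count` entities with unique UUID-string ids
    // and returns the id of the last one, which is the element every approach searches for.
    public static (List<Entity> Entities, string TargetId) Create(int count)
    {
        if (count < 1)
        {
            throw new ArgumentOutOfRangeException(nameof(count), "At least one entity is required.");
        }

        var entities = new List<Entity>(count);
        for (int i = 0; i < count; i++)
        {
            entities.Add(new Entity(Guid.NewGuid().ToString()));
        }
        return (entities, entities[count - 1].Id);
    }
}
```

Calling `TestData.Create(1)`, `TestData.Create(50)` and `TestData.Create(100)` gives the three list sizes used in the test, each with its search target at the end.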

I have tried these approaches:

- LINQ query

- simple foreach

- map (dictionary) with the entity id as key
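The three approaches can be sketched as follows. This is an illustrative version, not the author's test code: `Entity`, `Lookup` and the method names are hypothetical. The LINQ variant uses the same `Where(...).FirstOrDefault()` form as the query above, and the map variant assumes a `Dictionary<string, Entity>` keyed by id.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical entity type with a string UUID identifier.
public class Entity
{
    public string Id { get; }
    public Entity(string id) { Id = id; }
}

public static class Lookup
{
    // 1) LINQ query: filter with Where, then take the first match (or null).
    public static Entity FindWithLinq(IList<Entity> entities, string id) =>
        entities.Where(x => x.Id.Equals(id)).FirstOrDefault();

    // 2) Simple foreach: returns the first match, or null if none is found.
    public static Entity FindWithForeach(IList<Entity> entities, string id)
    {
        foreach (Entity entity in entities)
        {
            if (entity.Id.Equals(id))
            {
                return entity;
            }
        }
        return null;
    }

    // 3) Map keyed by entity id: constant lookup time on average, but it can
    //    only answer "find by id", not arbitrary queries.
    public static Entity FindWithMap(IDictionary<string, Entity> byId, string id) =>
        byId.TryGetValue(id, out Entity entity) ? entity : null;
}
```

The map only pays off if it is built once and reused for many lookups, for example with `entities.ToDictionary(x => x.Id)`; building it for a single search would cost more than scanning the list.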

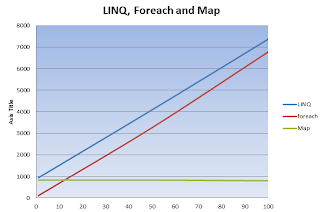

The results are quite interesting, as the attached table and chart show. The map was included only for comparison; it obviously doesn't support general querying.

Conclusions: LINQ, foreach or dictionary?

It seems that a LINQ query over an IList spends significant time on initialization, a fixed setup cost that is paid on every call. For small amounts of data, a LINQ query can be almost 10x slower than a simple foreach. Note that for the described problem the map is the clear winner, because its lookup time is constant on average, regardless of the number of items.

Reject LINQ? No!

As you can see, the results are not that bad for LINQ. Keep in mind that the 7-second mark corresponds to one million calls with 50 items in the list, so LINQ takes about 7 microseconds per search and foreach about 6. For a list with very few items the absolute difference is also negligible: about 0.9 microseconds for LINQ versus 0.1 microseconds for foreach. I believe LINQ should be rejected only in heavily performance-tuned solutions.
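The conversion from the chart's totals to per-search cost is simply total time divided by the number of calls. The sketch below shows that arithmetic together with a simple `Stopwatch` loop that could produce such totals; the `Benchmark` class and its methods are hypothetical and not part of the original test.

```csharp
using System;
using System.Diagnostics;

public static class Benchmark
{
    // Converts the total time of `calls` searches into microseconds per search,
    // e.g. 7 s for 1,000,000 calls gives 7 microseconds per call.
    public static double MicrosecondsPerCall(TimeSpan total, long calls) =>
        total.TotalMilliseconds * 1000.0 / calls;

    // Runs `search` `calls` times and returns the average cost per call in microseconds.
    public static double Measure<T>(Func<T> search, long calls)
    {
        search(); // warm-up call so JIT compilation is not included in the timing
        var stopwatch = Stopwatch.StartNew();
        for (long i = 0; i < calls; i++)
        {
            search();
        }
        stopwatch.Stop();
        return MicrosecondsPerCall(stopwatch.Elapsed, calls);
    }
}
```

For example, `Benchmark.Measure(() => entities.Where(x => x.Id.Equals(id)).FirstOrDefault(), 1_000_000)` would time one million LINQ searches. A hand-written loop like this is only a rough indicator; a dedicated benchmarking tool such as BenchmarkDotNet handles warm-up and statistical noise more carefully.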
