NHibernate provides many ways to query the database:

First three of these querying methods define the body of query in the other than native SQL. It implies that NHibernate must transform these queries into native SQL - according to given dialect, e.g. into native MS SQL query.

If you really want to develop all-times fast application the described process can present unpleasant behavior. How to avoid query compilation?

Compiled named queries

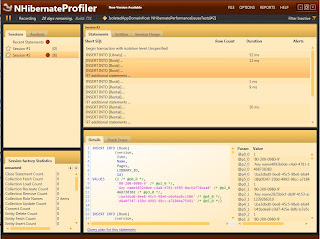

Its ridiculous but everyone met compiled (and named) query. If you have at least once browsed the log which NHibernate produced, you have had to meet similar set of lines:

When NHibernate starts (SessionFactory as spring.net bean in my case) he complies certainly usable queries. In displayed case, NHibernate knows that he'll probably search library entity in database by it's id so he'll generate native SQL query into internal cache and if the developer's code call Find for Library entity, he'll use exactly this pre-compiled select query in native SQL.

What's the main benefit? If you would call thousand times library's Find NHibernate would always has to compile the query. Instead of this inefficient behavior, NHibernate does it only once and uses pre-compiled version later.

How to speed you query? Use pre-complied named queries

As I've already written, NHibernate generates pre-compiled queries, stores them in the cache and uses them if necessary. NHibernate is the great framework, so he makes available such functionality even for you :-)

Simply you can declare list of HQL (or SQL) named queries within any mapping (hbm.xml) file, NHibernate loads the file, parse queries and preserves the pre-compiled version in his internal cache so called queries in runtime not have to be intricately parsed to native SQL, but he'll use already prepared ones.

How to use named queries?

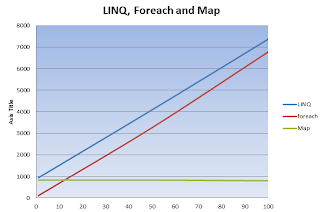

How is the speed up of named queries?

Lets say, I'll use Book.with.any.Rental query for any measures to we'll see how omitted query compilation improves test response.

I've executed the test for both named query and plain HQL. According to debug labels, plain HQL case spent 40ms by parsing of HQL to native SQL.

Note that all written till now applies only to the first call. NHibernate is tricky framework so he caches queries for you automatically when he compiles them for first time. Lets call method to get books with any rental two times (first level cache is cleared among the calls):

What are real advantages of named queries?

It doesn't seem such brilliant think to moil with writing the queries in xml file. What are real benefits?

The first three of these querying methods define the body of the query in something other than native SQL. This means that NHibernate must translate such queries into native SQL for the configured dialect, e.g. into a native MS SQL query.

If you want your application to be fast at all times, this translation step can be an unpleasant overhead. How can you avoid query compilation?

Compiled named queries

It may sound ridiculous, but everyone has already met a compiled (and named) query. If you have ever browsed the log that NHibernate produces, you must have come across a line like this one:

2010-12-13 21:26:42,056 DEBUG [7] NHibernate.Loader.Entity.AbstractEntityLoader .ctor:0 Static select for entity eu.podval.NHibernatePerformanceIssues.Model.Library: SELECT library0_.Id as Id1_0_, library0_.version as version1_0_, library0_.Address as Address1_0_, library0_.Director as Director1_0_, library0_.Name as Name1_0_ FROM [Library] library0_ WHERE library0_.Id=?

When NHibernate starts (the SessionFactory is a Spring.NET bean in my case), it compiles the queries it will certainly need. In the case shown, NHibernate knows that it will probably look up the Library entity in the database by its id, so it generates the native SQL query and stores it in an internal cache. When the developer's code calls Find for the Library entity, NHibernate uses exactly this pre-compiled native SQL select.

What's the main benefit? If you called Library's Find a thousand times, NHibernate would otherwise have to compile the query every time. Instead of this inefficient behavior, NHibernate compiles it only once and reuses the pre-compiled version afterwards.
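The compile-once, reuse-many-times idea can be illustrated with a small sketch. This is not NHibernate's internal code; `QueryPlanCache`, `GetSql` and the `translate` delegate are hypothetical names, used only to show how caching the translated SQL avoids repeating the expensive step.

```csharp
using System;
using System.Collections.Generic;

// Conceptual sketch only, not NHibernate's implementation.
// All names here (QueryPlanCache, GetSql, translate) are hypothetical.
public sealed class QueryPlanCache
{
    // Translated SQL keyed by the original query text.
    private readonly Dictionary<string, string> _plans =
        new Dictionary<string, string>();

    // Stands in for the expensive query-to-native-SQL translation.
    private readonly Func<string, string> _translate;

    // How many times the expensive translation actually ran.
    public int TranslationCount { get; private set; }

    public QueryPlanCache(Func<string, string> translate)
    {
        if (translate == null) throw new ArgumentNullException("translate");
        _translate = translate;
    }

    // Returns the cached SQL, translating only on the first request.
    // Not thread-safe; a real cache would need synchronization.
    public string GetSql(string query)
    {
        string sql;
        if (!_plans.TryGetValue(query, out sql))
        {
            sql = _translate(query);
            TranslationCount++;
            _plans[query] = sql;
        }
        return sql;
    }
}
```

Calling `GetSql` a thousand times with the same query text runs the `translate` delegate only once, so `TranslationCount` stays at 1.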

How to speed up your query? Use pre-compiled named queries

As I've already written, NHibernate generates pre-compiled queries, stores them in a cache and uses them when needed. NHibernate is a great framework, so it makes this functionality available to you as well :-)

You can simply declare a list of HQL (or SQL) named queries in any mapping (hbm.xml) file. NHibernate loads the file, parses the queries and keeps the pre-compiled versions in its internal cache, so queries called at runtime don't have to be laboriously translated to native SQL; the already prepared ones are used instead.

How to use named queries?

- Define a new hbm.xml file that will contain your named queries, e.g. named-queries.hbm.xml

- Place this file in an assembly that NHibernate searches for hbm mapping files. You may have to update your Fluent NHibernate configuration to search for these files. The following code will do it for you, or see FluentNHibernateSessionFactory.

      m.HbmMappings.AddFromAssembly(Assembly.Load(assemblyName));

Now it's time to write your named-queries.hbm.xml file. It has the following structure.

    <hibernate-mapping xmlns="urn:nhibernate-mapping-2.2">
      <query name="Book.with.any.Rental">
        <![CDATA[
        select b from Book b
        where exists elements(b.Rentals)
        ]]>
      </query>
    </hibernate-mapping>

How to use it?

    IList<Book> books = SessionFactory.GetCurrentSession().GetNamedQuery("Book.with.any.Rental").List<Book>();

How much do named queries speed things up?

Let's use the Book.with.any.Rental query for the measurements, to see how skipping query compilation improves the response time of a test.

I executed the test for both the named query and plain HQL. According to the debug log entries, the plain HQL case spent 40 ms parsing HQL into native SQL.

Note that everything written so far applies only to the first call. NHibernate is a clever framework, so it automatically caches queries for you once it has compiled them for the first time. Let's call the method that gets books with any rental twice (the first-level cache is cleared between the calls):

- first call took 190ms

- second one only 26ms
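A measurement like this could be sketched as follows. `QueryTiming`, `TimeMs` and `TimeTwice` are hypothetical helper names, and opening a fresh session per call is my assumption for getting an empty first-level cache; the numbers above came from my own test and debug log, not from this code.

```csharp
using System;
using System.Diagnostics;
using NHibernate;

// Sketch only: QueryTiming and its methods are hypothetical helpers.
public static class QueryTiming
{
    // Runs the action once and returns the elapsed wall-clock milliseconds.
    public static long TimeMs(Action action)
    {
        Stopwatch watch = Stopwatch.StartNew();
        action();
        watch.Stop();
        return watch.ElapsedMilliseconds;
    }

    // Executes the query twice, each time in a fresh session so that the
    // first-level cache is empty, and prints both timings.
    public static void TimeTwice(ISessionFactory factory, string label,
                                 Func<ISession, IQuery> createQuery)
    {
        for (int call = 1; call <= 2; call++)
        {
            using (ISession session = factory.OpenSession())
            {
                long ms = TimeMs(() => createQuery(session).List());
                Console.WriteLine("{0}, call {1}: {2} ms", label, call, ms);
            }
        }
    }
}
```

For the named query you would call `QueryTiming.TimeTwice(factory, "named", s => s.GetNamedQuery("Book.with.any.Rental"))`, and for plain HQL `QueryTiming.TimeTwice(factory, "plain HQL", s => s.CreateQuery("select b from Book b where exists elements(b.Rentals)"))`. The measured time also includes SQL execution and object loading, so only the difference between the variants says something about query compilation.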

What are the real advantages of named queries?

Fiddling with queries in an XML file doesn't look like such a brilliant idea. So what are the real benefits?

- Speed (of the first call) - the described example saves 40 ms on the first call of the method. That doesn't seem like much, but imagine that you are developing a huge project with almost a hundred queries. It can save a lot of time! Note also that the chosen query was very simple. In my experience, compiling a more complicated query takes at least 200 ms. That's not a small amount of time when you are developing a very fast application

- HQL is checked for errors on startup - you'll find out whether your query is correct or wrong at application startup, because NHibernate parses named queries when it starts. You don't have to wait until the query is actually called

- Clean code - you aren't mixing C# code with SQL (HQL) code

- Possibility to change a query after the application is compiled - you can change your HQL or SQL even though the application has already been compiled. You can simply expose the named query hbm.xml file as an ordinary XML file and tune your queries at runtime, that is, without recompiling the application
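The startup check from the list above can be made visible with a sketch like the one below. `StartupCheck` and `TryBuildFactory` are hypothetical names, and I'm assuming the plain `Configuration` API with NHibernate's named-query startup check left enabled (which I believe is the default); with Fluent NHibernate the factory is built differently, but an error in a named query should surface at the same moment.

```csharp
using System;
using System.Reflection;
using NHibernate;
using NHibernate.Cfg;

// Sketch only: StartupCheck and TryBuildFactory are hypothetical names.
public static class StartupCheck
{
    // Builds the session factory from the default configuration plus the
    // hbm.xml resources embedded in the given assembly.
    // Returns null when NHibernate rejects the configuration or mappings.
    public static ISessionFactory TryBuildFactory(Assembly mappingAssembly)
    {
        try
        {
            Configuration cfg = new Configuration();
            cfg.Configure();                  // default config, e.g. hibernate.cfg.xml
            cfg.AddAssembly(mappingAssembly); // loads embedded *.hbm.xml files
            return cfg.BuildSessionFactory(); // named queries are checked here
        }
        catch (HibernateException ex)
        {
            // A broken named query is reported now, not at its first call.
            Console.Error.WriteLine("Session factory could not be built: " + ex.Message);
            return null;
        }
    }
}
```

If a query in named-queries.hbm.xml contains a typo, the application fails right here at startup instead of somewhere deep in a rarely used code path.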